A key component of adaptive behavior is the planning and coordination of sequences of elemental actions. Take a real-world example: to make good coffee, one has to weigh the beans, grind them to the desired coarseness, and then add hot water. If one mixed the beans with hot water before grinding, it would make a mess and sabotage the goal of making good coffee. This ability, the sequential coordination of actions, has been studied through the lens of many disciplines, including psychology, computer science, and neuroscience. However, many fundamental questions remain unanswered at both the behavioral and the neural-circuit level.

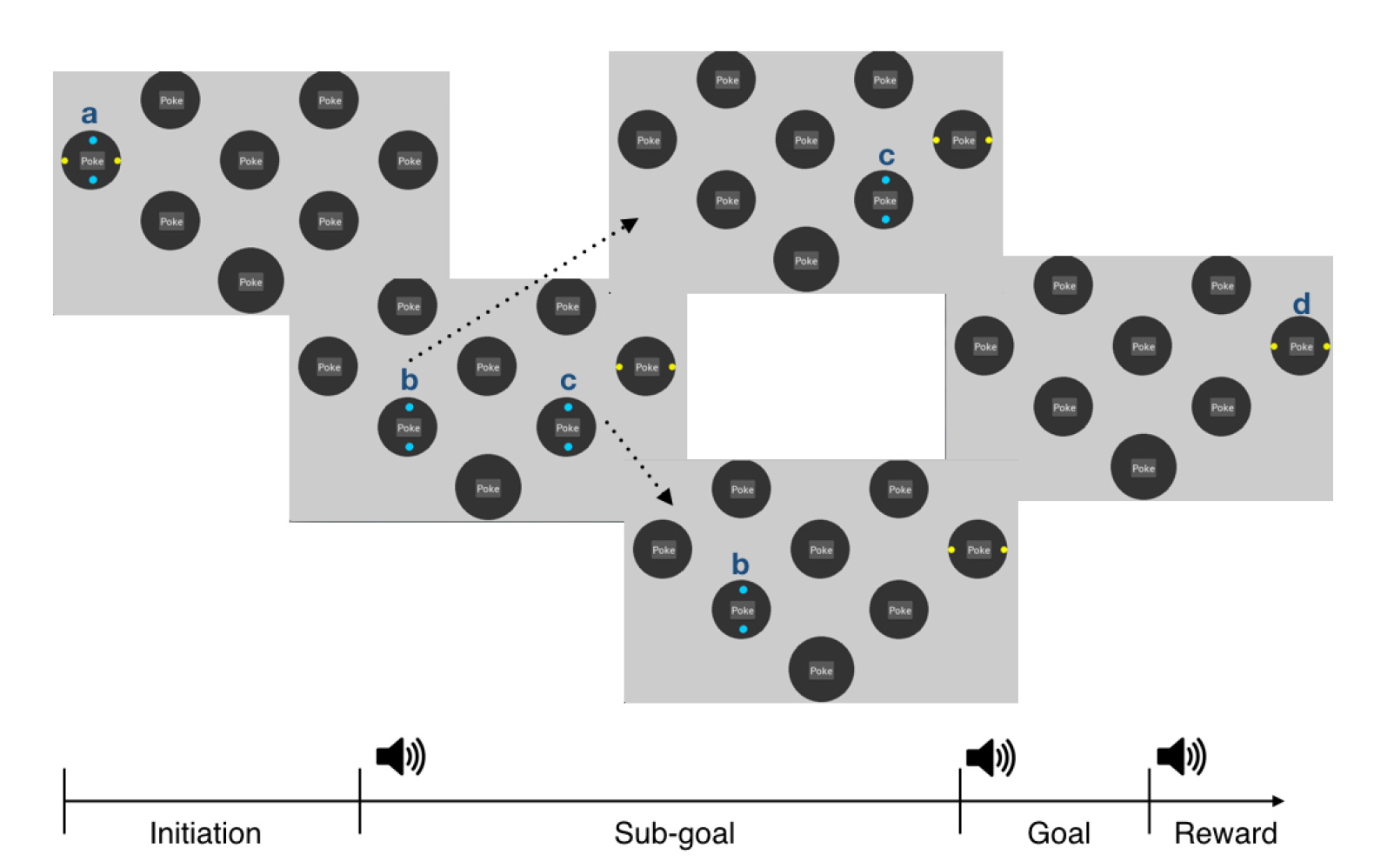

Inspired by Ribas-Fernandes et al. 2011, we designed a sequential decision-making task in which rodents are trained to complete a series of actions in a predefined order. Specifically, they are required to poke all the blue-lit pokes (sub-goal) before the yellow-lit poke (goal) to get a reward (see below for a schematic and a video of a rat performing the task).

We kept the total distance that rodents need to traverse constant while varying the distance to the sub-goal, so that the reward prediction error (RPE) is fixed and only the sub-goal-related prediction error (pseudo-reward prediction error, PPE) varies. We reasoned that this dissociation is important for understanding whether PPE contributes to shaping high-level action policies, or even sub-goal or state representations, in reinforcement learning. While preliminary data showed that rats can learn to make decisions based on the total distance to the goal while remaining indifferent to sub-goal distances, more work needs to be done to dissociate the neural substrates of PPE from those of RPE. We also trained virtual agents with different reinforcement learning models to compare with rodent behavior.
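The logic of holding RPE fixed while varying PPE can be illustrated with a minimal sketch. It is not the model we fit to the data: it assumes a single sub-goal, straight-line travel, and a value that is simply the negative path length, and the function names and coordinates are hypothetical. Under those assumptions, moving the sub-goal along the route changes the distance to the sub-goal (a nonzero PPE) without changing the total distance to the goal (a zero RPE).

```python
import math


def dist(a, b):
    """Euclidean distance between two (x, y) points."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


def path_lengths(start, subgoal, goal):
    """Return (distance to the sub-goal, total distance via the sub-goal)."""
    to_subgoal = dist(start, subgoal)
    total = to_subgoal + dist(subgoal, goal)
    return to_subgoal, total


def prediction_errors(start, old_subgoal, new_subgoal, goal, cost_per_unit=1.0):
    """Compare two layouts under an assumed linear distance cost.

    RPE: change in the value of reaching the goal (total path length).
    PPE: change in the value of reaching the sub-goal (first leg only).
    Both values are modeled as negative travel cost (an assumption).
    """
    old_sub, old_total = path_lengths(start, old_subgoal, goal)
    new_sub, new_total = path_lengths(start, new_subgoal, goal)
    rpe = -cost_per_unit * (new_total - old_total)
    ppe = -cost_per_unit * (new_sub - old_sub)
    return rpe, ppe


# Hypothetical layout: the sub-goal moves along the straight line from
# start to goal, so the total distance stays the same.
rpe, ppe = prediction_errors(
    start=(0, 0), old_subgoal=(3, 0), new_subgoal=(7, 0), goal=(10, 0)
)
print(f"RPE = {rpe:.1f}, PPE = {ppe:.1f}")
```

In this toy layout the sub-goal becomes four units farther away, so the PPE is negative, while the total path length (and hence the RPE) is unchanged. Any layout in which the sub-goal moves without changing the total path length gives the same dissociation.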

Reach out to me if you would like more details about the task and how we trained rats to perform it.